2178: Refacto docker r=irevoire a=irevoire

closes#2166 and #2085

-----------

I noticed many people had issues with the default configuration of our Dockerfile.

Some examples:

- #2166: If you use ubuntu and mount your `data.ms` in a volume (as shown in the [doc](https://docs.meilisearch.com/learn/getting_started/installation.html#download-and-launch)), you can't run meilisearch

- #2085: Here, meilisearch was not able to erase the `data.ms` when loading a dump because it's the mount point

Currently, we don't show how to use the snapshot and dumps with docker in the documentation. And it's quite hard to do:

- You either send a big command to meilisearch to change the dump-path, snapshot-path and db-path a single directory and then mount that one

- Or you mount three volumes

- And there were other issues on the slack community

I think this PR solve the problem.

Now the image contains the `meilisearch` binary in the `/bin` directory, so it's easy to find and always in the `PATH`.

It creates a `data` directory and moves the working-dir in it.

So now you can find the `dumps`, `snapshots` and `data.ms` directory in `/data`.

Here is the new command to run meilisearch with a volume:

```

docker run -it --rm -v $PWD/meili_data:/data -p 7700:7700 getmeili/meilisearch:latest

```

And if you need to import a dump or a snapshot, you don't need to restart your container and mount another volume. You can directly hit the `POST /dumps` route and then run:

```

docker run -it --rm -v $PWD/meili_data:/data -p 7700:7700 getmeili/meilisearch:latest meilisearch --import-dump dumps/20220217-152115159.dump

```

-------

You can already try this PR with the following docker image:

```

getmeili/meilisearch:test-docker-v0.26.0

```

If you want to use the v0.25.2 I created another image;

```

getmeili/meilisearch:test-docker-v0.25.2

```

------

If you're using helm I created a branch [here](https://github.com/meilisearch/meilisearch-kubernetes/tree/test-docker-v0.26.0) that use the v0.26.0 image with the good volume 👍

If you use this conf with the v0.25.2, it should also work.

Co-authored-by: Tamo <tamo@meilisearch.com>

2298: Nested fields r=irevoire a=irevoire

There are a few things that I want to fix _AFTER_ merging this PR.

For the following RCs.

## Stop the useless conversion

In the `search.rs` I convert a `Document` to a `Value`, and then the `Value` to a `Document` and then back to a `Value` etc. I should stop doing all these conversion and stick to one format.

Probably by merging my `permissive-json-pointer` crate into meilisearch.

That would also give me the opportunity to work directly with obkvs and stops deserializing fields I don't need.

## Add more test specific to the nested

Everything seems to works but I should write tests to double check that the nested works well with the `formatted` field.

## See how I could stop iterating on hashmap and instead fill them correctly

This is related to milli. I really often needs to iterate over hashmap to see if a field is a subset of another field. I could probably generate a structure containing all the possible key values.

ie. the user say `doggo` is an attribute to retrieve. Instead of iterating on all the attributes to retrieve to check if `doggo.name` is a subset of `doggo`. I should insert `doggo.name` in the attributes to retrieve map.

Co-authored-by: Tamo <tamo@meilisearch.com>

2297: Feat(Search): Enhance formating search results r=ManyTheFish a=ManyTheFish

Add new settings and change crop_len behavior to count words instead of characters.

- [x] `highlightPreTag`

- [x] `highlightPostTag`

- [x] `cropMarker`

- [x] `cropLength` count word instead of chars

- [x] `cropLength` 0 is now considered as no `cropLength`

- [ ] ~smart crop finding the best matches interval~ (postponed)

Partially fixes #2214. (no smart crop)

Co-authored-by: ManyTheFish <many@meilisearch.com>

2271: Simplify Dockerfile r=ManyTheFish a=Thearas

# Pull Request

## What does this PR do?

1. Fixes#2234

2. Replace `$TARGETPLATFORM` with `apk --print-arch` to make Dockerfile available for `docker build` as well, not just `docker buildx` (inspired by [rust-lang/docker-rust](https://github.com/rust-lang/docker-rust/blob/master/1.59.0/alpine3.14/Dockerfile#L13))

PTAL `@curquiza`

## PR checklist

Please check if your PR fulfills the following requirements:

- [x] Does this PR fix an existing issue?

- [x] Have you read the contributing guidelines?

- [x] Have you made sure that the title is accurate and descriptive of the changes?

Thank you so much for contributing to Meilisearch!

Co-authored-by: Thearas <thearas850@gmail.com>

2277: fix(http): fix panic when sending document update without content type header r=MarinPostma a=MarinPostma

I found a panic when pushing documents without a content-type. This fixes is by returning unknown instead of crashing.

Co-authored-by: ad hoc <postma.marin@protonmail.com>

2207: Fix: avoid embedding the user input into the error response. r=Kerollmops a=CNLHC

# Pull Request

## What does this PR do?

Fix#2107.

The problem is meilisearch embeds the user input to the error message.

The reason for this problem is `milli` throws a `serde_json: Error` whose `Display` implementation will do this embedding.

I tried to solve this problem in this PR by manually implementing the `Display` trait for `DocumentFormatError` instead of deriving automatically.

<!-- Please link the issue you're trying to fix with this PR, if none then please create an issue first. -->

## PR checklist

Please check if your PR fulfills the following requirements:

- [x] Does this PR fix an existing issue?

- [x] Have you read the contributing guidelines?

- [x] Have you made sure that the title is accurate and descriptive of the changes?

Thank you so much for contributing to Meilisearch!

Co-authored-by: Liu Hancheng <cn_lhc@qq.com>

Co-authored-by: LiuHanCheng <2463765697@qq.com>

2281: Hard limit the number of results returned by a search r=Kerollmops a=Kerollmops

This PR fixes#2133 by hard-limiting the number of results that a search request can return at any time. I would like the guidance of `@MarinPostma` to test that, should I use a mocking test here? Or should I do anything else?

I talked about touching the _nb_hits_ value with `@qdequele` and we concluded that it was not correct to do so.

Could you please confirm that it is the right place to change that?

Co-authored-by: Kerollmops <clement@meilisearch.com>

2267: Add instance options for RAM and CPU usage r=Kerollmops a=2shiori17

# Pull Request

## What does this PR do?

Fixes#2212

<!-- Please link the issue you're trying to fix with this PR, if none then please create an issue first. -->

## PR checklist

Please check if your PR fulfills the following requirements:

- [x] Does this PR fix an existing issue?

- [x] Have you read the contributing guidelines?

- [x] Have you made sure that the title is accurate and descriptive of the changes?

Thank you so much for contributing to Meilisearch!

Co-authored-by: 2shiori17 <98276492+2shiori17@users.noreply.github.com>

Co-authored-by: shiori <98276492+2shiori17@users.noreply.github.com>

2254: Test with default CLI opts r=Kerollmops a=Kerollmops

Fixes#2252.

This PR makes sure that we test the HTTP engine with the default CLI parameters and removes some useless internal CLI options.

Co-authored-by: Kerollmops <clement@meilisearch.com>

2280: Bump the milli dependency to 0.24.1 r=curquiza a=Kerollmops

We had issues with lindera recently, it was unable to download the official dictionaries from Google Drive and this was causing issues with our CIs (and other users' CIs too). The maintainer changed the source to download the dictionaries to get it from Sourceforge and it is much better and stable now.

This PR bumps the milli dependency to the latest version which includes the latest version of the tokenizer which, itself, includes the latest version of lindera, I advise that we rebase the currently opened pull requests to include this PR when it is merged on main.

Co-authored-by: Kerollmops <clement@meilisearch.com>

2263: Upgrade the config of the issue management r=curquiza a=curquiza

To reduce the feature feedback in the meilisearch repo

Co-authored-by: Clémentine Urquizar - curqui <clementine@meilisearch.com>

2253: refactor authentication key extraction r=ManyTheFish a=MarinPostma

I am concerned that the part of the code that performs the key prefix extraction from the jwt token migh be misused in the future. Since this is a critical part of the code, I moved it into it's own function. Since we deserialized the payload twice anyway, I reordered the verifications, and we now use the data from the validated token.

Co-authored-by: ad hoc <postma.marin@protonmail.com>

2264: Import milli from meilisearch-lib r=Kerollmops a=Kerollmops

This PR directly imports the milli dependency used in _meilisearch-http_ from _meilisearch-lib_. I can't import _meilisearch-lib_ in _meiliserach-auth_ and that _meilisearch-auth_ can't use the milli exported by _meilisearch-lib_ 😞

Co-authored-by: Kerollmops <clement@meilisearch.com>

2245: Add test to validate cli r=irevoire a=MarinPostma

followup on #2242 and #2243

Add a test to make sure the cli is valid, and add a CI task to run the tests in debug to make sure we hit debug assertions.

FYI `@curquiza,` because of CI changes

Co-authored-by: ad hoc <postma.marin@protonmail.com>

2244: chore(all): bump milli r=curquiza a=MarinPostma

continues the work initiated by `@psvnlsaikumar` in #2228

Co-authored-by: Sai Kumar <psvnlsaikumar@gmail.com>

2243: bug(http): fix panic on startup r=MarinPostma a=MarinPostma

this seems to fix#2242

I am not sure why this doesn't reproduce on v0.26.0, so we should remain vigilant.

`@curquiza` FYI

Co-authored-by: ad hoc <postma.marin@protonmail.com>

2238: cargo: use resolver 2 r=MarinPostma a=happysalada

# Pull Request

## What does this PR do?

use resolver 2 from cargo.

This enables mainly to propagate the `--no-default-features` flag to workspace crates.

I mistakenly thought before that it was enough to have edition 2021 enabled. However it turns out that for virtual workspaces, this needs to be explicitely defined.

https://doc.rust-lang.org/edition-guide/rust-2021/default-cargo-resolver.html

This will also change a little how your dependencies are compiled. See https://blog.rust-lang.org/2021/03/25/Rust-1.51.0.html#cargos-new-feature-resolver for more details.

Just to give a bit more context, this is for usage in nixos. I have tried to do the upgrade today with the latest version, and the no default features flag is just ignored.

Let me know if you need more details of course.

## PR checklist

Please check if your PR fulfills the following requirements:

- [ ] Does this PR fix an existing issue?

- [ ] Have you read the contributing guidelines?

- [ ] Have you made sure that the title is accurate and descriptive of the changes?

Thank you so much for contributing to Meilisearch!

Co-authored-by: happysalada <raphael@megzari.com>

2233: Change CI name for publishing binaries r=Kerollmops a=curquiza

Minor change regarding the CI job names. Should not impact the usage.

Co-authored-by: Clémentine Urquizar - curqui <clementine@meilisearch.com>

2204: Fix blocking auth r=Kerollmops a=MarinPostma

Fix auth blocking runtime

I have decided to remove async code from `meilisearch-auth` and let `meilisearch-http` handle that.

Because Actix polls the extractor futures concurrently, I have made a wrapper extractor that forces the errors from the futures to be returned sequentially (though is still polls them sequentially).

close#2201

Co-authored-by: ad hoc <postma.marin@protonmail.com>

2197: Additions to 0.26 (Update actix-web dependency to 4.0) r=curquiza a=MarinPostma

- `@robjtede`

`@MarinPostma`

[update actix-web dependency to 4.0](3b2e467ca6)

From main to release-v0.26.0

Co-authored-by: Rob Ede <robjtede@icloud.com>

2194: update actix-web dependency to 4.0 r=irevoire a=robjtede

# Pull Request

## What does this PR do?

Updates Actix Web ecosystem crates to 4.0 stable.

## PR checklist

Please check if your PR fulfills the following requirements:

- [x] ~~Does this PR fix an existing issue?~~

- [x] Have you read the contributing guidelines?

- [x] Have you made sure that the title is accurate and descriptive of the changes?

Co-authored-by: Rob Ede <robjtede@icloud.com>

2192: Fix max dbs error r=Kerollmops a=MarinPostma

Factor the way we open environments to make sure they are always opened with the same options.

The issue was that indexes were first opened in snapshots with incorrect options, and heed cache returned an environment with incorrect open options on subsequent index open.

fix#2190

Co-authored-by: ad hoc <postma.marin@protonmail.com>

2173: chore(all): replace chrono with time r=irevoire a=irevoire

Chrono has been unmaintained for a few month now and there is a CVE on it.

Also I updated all the error messages related to the API key as you can see here: https://github.com/meilisearch/specifications/pull/114fix#2172

Co-authored-by: Irevoire <tamo@meilisearch.com>

2171: Update LICENSE with Meili SAS r=curquiza a=curquiza

Check with thomas, we must put the real name of the company

Co-authored-by: Clémentine Urquizar - curqui <clementine@meilisearch.com>

2122: fix: docker image failed to boot on arm64 node r=curquiza a=Thearas

# Pull Request

## What does this PR do?

Fixes#2115.

## PR checklist

Please check if your PR fulfills the following requirements:

- [x] Does this PR fix an existing issue?

- [x] Have you read the contributing guidelines?

- [x] Have you made sure that the title is accurate and descriptive of the changes?

Co-authored-by: Thearas <thearas850@gmail.com>

Co-authored-by: Clémentine Urquizar - curqui <clementine@meilisearch.com>

2157: fix(auth): fix env being closed when dumping r=Kerollmops a=MarinPostma

When creating a dump, the auth store environment would be closed on drop, so subsequent dumps couldn't reopen the environment. I have added a flag in the environment to prevent the closing of the environment on drop when dumping.

Co-authored-by: ad hoc <postma.marin@protonmail.com>

2136: Refactoring CI regarding ARM binary publish r=curquiza a=curquiza

Fixes https://github.com/meilisearch/meilisearch/issues/1909

- Remove CI file to publish aarch64 binary and put the logic into `publish-binary.yml`

- Remove the job to publish armv8 binary

- Fix download-latest script accordingly

- Adapt dowload-latest with the specific case of the MacOS m1

Co-authored-by: Clémentine Urquizar <clementine@meilisearch.com>

Co-authored-by: meili-bot <74670311+meili-bot@users.noreply.github.com>

2005: auto batching r=MarinPostma a=MarinPostma

This pr implements auto batching. The basic functioning of this is that all updates that can be batched together are batched together while the previous batch is being processed.

For now, the only updates that can be batched together are the document addition updates (both update and replace), for a single index.

The batching is disabled by default for multiple reasons:

- We need more experimentation with the scheduling techniques

- Right now, if one task fails in a batch, the whole batch fails. We need more permissive error handling when processing document indexation.

There are four CLI options, for now, to interact with how the batch is scheduled:

- `enable-autobatching`: enable the autobatching feature.

- `debounce-duration-sec`: When an update is received, wait that number of seconds before batching and performing the updates. Defaults to 0s.

- `max-batch-size`: the maximum number of tasks per batch, defaults to unlimited.

- `max-documents-per-batch`: the maximum number of documents in a batch, defaults to unlimited. The batch will always contain a least 1 task, no matter the number of documents in that task.

# Implementation

The current implementation is made of 3 major components:

## TaskStore

The `TaskStore` contains all the tasks. When a task is pushed, it is directly registered to the task store.

## Scheduler

The scheduler is in charge of making the batches. At its core, there is a `TaskQueue` and a job queue. `Job`s are always processed first. They are *volatile* tasks, that is, they don't have a TaskId and are not persisted to disk. Snapshots and dumps are examples of Jobs.

If no `Job` is available for processing, then the scheduler attempts to make a `Task` batch from the `TaskQueue`. The first step is to gather new tasks from the `TaskStore` to populate the `TaskQueue`. When this is done, we can prepare our batch. The `TaskQueue` is itself a `BinaryHeap` of `Tasklist`. Each `index_uid` is associated with a `TaskList` that contains all the updates associated with that index uid. Each `TaskList` in the `TaskQueue` is ordered by the id of its first task.

When preparing a batch, the `TaskList` at the top of the `TaskQueue` is popped, and the tasks are popped from the list to make the next batch. If there are remaining tasks in the list, the list is inserted back in the `TaskQueue`.

## UpdateLoop

The `UpdateLoop` role is to perform batch sequentially. Each time updates are pushed to the update store, the scheduler is notified, and will in turn notify the update loop that work can be performed. When notified, the update loop waits some time to wait for more incoming update and then asks the scheduler for the next batch to perform and perform it. When it is done, the status of the task is put back into the store, and the next batch is processed.

Co-authored-by: mpostma <postma.marin@protonmail.com>

2120: Bring `stable` into `main` r=curquiza a=curquiza

I forgot to do it, tell me `@Kerollmops` or `@irevoire` if it's useful or not. I would say yes, otherwise I will have conflict when I will try to bring `main` into `stable` for the next release. Maybe I'm wrong

Co-authored-by: Irevoire <tamo@meilisearch.com>

Co-authored-by: mpostma <postma.marin@protonmail.com>

Co-authored-by: Tamo <tamo@meilisearch.com>

Co-authored-by: bors[bot] <26634292+bors[bot]@users.noreply.github.com>

Co-authored-by: Clémentine Urquizar - curqui <clementine@meilisearch.com>

2098: feat(dump): Provide the same cli options as the snapshots r=MarinPostma a=irevoire

Add two cli options for the dump:

- `--ignore-missing-dump`

- `--ignore-dump-if-db-exists`

Fix#2087

Co-authored-by: Tamo <tamo@meilisearch.com>

2086: feat(analytics): send the whole set of cli options instead of only the snapshot r=MarinPostma a=irevoire

Fixes#2088

Co-authored-by: Tamo <tamo@meilisearch.com>

2099: feat(analytics): Set the timestamp of the aggregated event as the first aggregate r=MarinPostma a=irevoire

2108: meta(auth): Enhance tests on authorization r=MarinPostma a=ManyTheFish

Enhance auth tests in order to be able to add new actions without changing tests.

Helping #2080

Co-authored-by: Tamo <tamo@meilisearch.com>

Co-authored-by: ManyTheFish <many@meilisearch.com>

2101: chore(all): update actix-web dependency to 4.0.0-beta.21 r=MarinPostma a=robjtede

# Pull Request

## What does this PR do?

I don't expect any more breaking changes to Actix Web that will affect Meilisearch so bump to latest beta.

Fixes #N/A?

<!-- Please link the issue you're trying to fix with this PR, if none then please create an issue first. -->

## PR checklist

Please check if your PR fulfills the following requirements:

- [ ] Does this PR fix an existing issue?

- [x] Have you read the contributing guidelines?

- [x] Have you made sure that the title is accurate and descriptive of the changes?

Thank you so much for contributing to MeiliSearch!

Co-authored-by: Rob Ede <robjtede@icloud.com>

2095: feat(error): Update the error message when you have no version file r=MarinPostma a=irevoire

Following this [issue](https://github.com/meilisearch/meilisearch-kubernetes/issues/95) we decided to change the error message from:

```

Version file is missing or the previous MeiliSearch engine version was below 0.24.0. Use a dump to update MeiliSearch.

```

to

```

Version file is missing or the previous MeiliSearch engine version was below 0.25.0. Use a dump to update MeiliSearch.

```

Co-authored-by: Tamo <tamo@meilisearch.com>

2075: Allow payloads with no documents r=irevoire a=MarinPostma

accept addition with 0 documents.

0 bytes payload are still refused, since they are not valid json/jsonlines/csv anyways...

close#1987

Co-authored-by: mpostma <postma.marin@protonmail.com>

2068: chore(http): migrate from structopt to clap3 r=Kerollmops a=MarinPostma

migrate from structopt to clap3

This fix the long lasting issue with flags require a value, such as `--no-analytics` or `--schedule-snapshot`.

All flag arguments now take NO argument, i.e:

`meilisearch --schedule-snapshot true` becomes `meilisearch --schedule-snapshot`

as per https://docs.rs/clap/latest/clap/struct.Arg.html#method.env, the env variable is defines as:

> A false literal is n, no, f, false, off or 0. An absent environment variable will also be considered as false. Anything else will considered as true.

`@gmourier`

`@curquiza`

`@meilisearch/docs-team`

Co-authored-by: mpostma <postma.marin@protonmail.com>

2066: bug(http): fix task duration r=MarinPostma a=MarinPostma

`@gmourier` found that the duration in the task view was not computed correctly, this pr fixes it.

`@curquiza,` I let you decide if we need to make a hotfix out of this or wait for the next release. This is not breaking.

Co-authored-by: mpostma <postma.marin@protonmail.com>

2057: fix(dump): Uncompress the dump IN the data.ms r=irevoire a=irevoire

When loading a dump with docker, we had two problems.

After creating a tempdirectory, uncompressing and re-indexing the dump:

1. We try to `move` the new “data.ms” onto the currently present

one. The problem is that if the `data.ms` is a mount point because

that's what peoples do with docker usually. We can't override

a mount point, and thus we were throwing an error.

2. The tempdir is created in `/tmp`, which is usually quite small AND may not

be on the same partition as the `data.ms`. This means when we tried to move

the dump over the `data.ms`, it was also failing because we can't move data

between two partitions.

------------------

1 was fixed by deleting the *content* of the `data.ms` and moving the *content*

of the tempdir *inside* the `data.ms`. If someone tries to create volumes inside

the `data.ms` that's his problem, not ours.

2 was fixed by creating the tempdir *inside* of the `data.ms`. If a user mounted

its `data.ms` on a large partition, there is no reason he could not load a big

dump because his `/tmp` was too small. This solves the issue; now the dump is

extracted and indexed on the same partition the `data.ms` will lay.

fix#1833

Co-authored-by: Tamo <tamo@meilisearch.com>

2060: chore(all) set rust edition to 2021 r=MarinPostma a=MarinPostma

set the rust edition for the project to 2021

this make the MSRV to v1.56

#2058

Co-authored-by: Marin Postma <postma.marin@protonmail.com>

When loading a dump with docker, we had two problems.

After creating a tempdirectory, uncompressing and re-indexing the dump:

1. We try to `move` the new “data.ms” onto the currently present

one. The problem is that if the `data.ms` is a mount point because

that's what peoples do with docker usually. We can't override

a mount point, and thus we were throwing an error.

2. The tempdir is created in `/tmp`, which is usually quite small AND may not

be on the same partition as the `data.ms`. This means when we tried to move

the dump over the `data.ms`, it was also failing because we can't move data

between two partitions.

==============

1 was fixed by deleting the *content* of the `data.ms` and moving the *content*

of the tempdir *inside* the `data.ms`. If someone tries to create volumes inside

the `data.ms` that's his problem, not ours.

2 was fixed by creating the tempdir *inside* of the `data.ms`. If a user mounted

its `data.ms` on a large partition, there is no reason he could not load a big

dump because his `/tmp` was too small. This solves the issue; now the dump is

extracted and indexed on the same partition the `data.ms` will lay.

fix#1833

2059: change indexed doc count on error r=irevoire a=MarinPostma

change `indexed_documents` and `deleted_documents` to return 0 instead of null when empty when the task has failed.

close#2053

Co-authored-by: Marin Postma <postma.marin@protonmail.com>

2056: Allow any header for CORS r=curquiza a=curquiza

Bug fix: trigger a CORS error when trying to send the `User-Agent` header via the browser

`@bidoubiwa` thanks for the bug report!

Co-authored-by: Clémentine Urquizar <clementine@meilisearch.com>

2011: bug(lib): drop env on last use r=curquiza a=MarinPostma

fixes the `too many open files` error when running tests by closing the

environment on last drop

To check that we are actually the last owner of the `env` we plan to drop, I have wrapped all envs in `Arc`, and check that we have the last reference to it.

Co-authored-by: Marin Postma <postma.marin@protonmail.com>

2036: chore(ci): Enable rust_backtrace in the ci r=curquiza a=irevoire

This should help us to understand unreproducible panics that happens in the CI all the time

Co-authored-by: Tamo <tamo@meilisearch.com>

2035: Use self hosted GitHub runner r=curquiza a=curquiza

Checked with `@tpayet,` we have created a self hosted github runner to save time when pushing the docker images.

Co-authored-by: Clémentine Urquizar <clementine@meilisearch.com>

2037: test: Ignore the auths tests on windows r=irevoire a=irevoire

Since the auths tests fail sporadically on the windows CI but we can't reproduce these failures with a real windows machine we are going to ignore these ones.

But we still ensure they compile.

Co-authored-by: Tamo <tamo@meilisearch.com>

Since the auths tests fail sporadically on the windows CI but we can't

reproduce these failures with a real windows machine we are going to

ignore theses one.

But we still ensure they compile.

2033: Bug(FS): Consider empty pre-created directory as unexisting DB r=curquiza a=ManyTheFish

When the database directory was pre-created we were considering that DB is invalid, we are now accepting to create a database in it.

Co-authored-by: Maxime Legendre <maximelegendre@mbp-de-maxime.home>

2026: Bug(auth): Parse YMD date r=curquiza a=ManyTheFish

Use NaiveDate to parse YMD date instead of NaiveDatetime

fix#2017

Co-authored-by: Maxime Legendre <maximelegendre@mbp-de-maxime.home>

2025: Fix security index creation r=ManyTheFish a=ManyTheFish

Forbid index creation on alternates routes when the action `index.create` is not given

fix#2024

Co-authored-by: Maxime Legendre <maximelegendre@MacBook-Pro-de-Maxime.local>

2008: bug(lib): fix get dumps bad error code r=curquiza a=MarinPostma

fix bad error code being returned whet getting a dump status, and add a test

close#1994

Co-authored-by: Marin Postma <postma.marin@protonmail.com>

2006: chore(http): rename task types r=curquiza a=MarinPostma

Rename

- documentsAddition into documentAddition

- documentsPartial into documentPartial

- documentsDeletion into documentDeletion

close#1999

2007: bug(lib): ignore primary if already set on document addition r=curquiza a=MarinPostma

Ignore the primary key if it is already set on documents updates. Add a test for verify behaviour.

close#2002

Co-authored-by: Marin Postma <postma.marin@protonmail.com>

1989: Extend API keys r=curquiza a=ManyTheFish

# Pull Request

## What does this PR do?

- Add API keys in snapshots

- Add API keys in dumps

- fix QA #1979fix#1979fix#1995fix#2001fix#2003

related to #1890

Co-authored-by: many <maxime@meilisearch.com>

- Add API keys in snapshots

- Add API keys in dumps

- Rename action indexes.add to indexes.create

- fix QA #1979fix#1979fix#1995fix#2001fix#2003

related to #1890

1982: Set fail-fast to false in publish-binaries CI r=curquiza a=curquiza

This avoids the other jobs to fail if one of the jobs fails.

Co-authored-by: Clémentine Urquizar <clementine@meilisearch.com>

1908: Update CONTRIBUTING.md r=curquiza a=ferdi05

Added a sentence on other means to contribute. If we like it, we can add it in some other places.

# Pull Request

## What does this PR do?

Improves `CONTRIBUTING`

## PR checklist

Please check if your PR fulfills the following requirements:

- [ ] Does this PR fix an existing issue?

- [x] Have you read the contributing guidelines?

- [x] Have you made sure that the title is accurate and descriptive of the changes?

Thank you so much for contributing to MeiliSearch!

Co-authored-by: Ferdinand Boas <ferdinand.boas@gmail.com>

Co-authored-by: Clémentine Urquizar - curqui <clementine@meilisearch.com>

1984: Support boolean for the no-analytics flag r=Kerollmops a=Kerollmops

This PR fixes an issue with the `no-analytics` flag that was ignoring the value passed to it, therefore a `no-analytics false` was just understood as a `no-analytics` and was effectively disabling the analytics instead of enabling them. I found [a closed issue about this exact behavior on the structopt repository](https://github.com/TeXitoi/structopt/issues/468) and applied it here.

I don't think we should update the documentation as it must have worked like this from the start of this project. I tested it on my machine and it is working great now. Thank you `@nicolasvienot` for this issue report.

Fixes#1983.

Co-authored-by: bors[bot] <26634292+bors[bot]@users.noreply.github.com>

Co-authored-by: Clément Renault <clement@meilisearch.com>

1978: Fix of `release-v0.25.0` branch into `main` r=curquiza a=curquiza

The fixes in #1976 should be on main to be taken into account by

```

curl -L https://install.meilisearch.com | sh

```

Co-authored-by: Yann Prono <yann.prono@nist.gov>

Co-authored-by: Clémentine Urquizar <clementine@meilisearch.com>

Co-authored-by: bors[bot] <26634292+bors[bot]@users.noreply.github.com>

1976: Fix download-latest.sh r=curquiza a=Mcdostone

# Pull Request

## What does this PR do?

Fixes#1975

The script was broken because `grep` matches the word **draft** in the changelog of [v0.25.0rc0](https://github.com/meilisearch/MeiliSearch/releases/tag/v0.25.0rc0)

> Misc

> Remove email address from the launch message (#1896) `@curquiza`

> Remove release drafter workflow (#1882) `@curquiza` ⚠️👀

## PR checklist

Please check if your PR fulfills the following requirements:

- [x] Does this PR fix an existing issue?

- [x] Have you read the contributing guidelines?

- [x] Have you made sure that the title is accurate and descriptive of the changes?

Thank you so much for contributing to MeiliSearch!

> Your product is awesome!

1977: Fix Dockerfile r=MarinPostma a=curquiza

Remove this error

<img width="830" alt="Capture d’écran 2021-12-07 à 17 00 46" src="https://user-images.githubusercontent.com/20380692/145063294-51ae2c50-2468-47e9-a891-542d824cad8e.png">

Co-authored-by: Yann Prono <yann.prono@nist.gov>

Co-authored-by: Clémentine Urquizar <clementine@meilisearch.com>

1970: Use milli reexported tokenizer r=curquiza a=ManyTheFish

Use milli reexported tokenizer instead of importing meilisearch-tokenizer dependency.

fix#1888

Co-authored-by: many <maxime@meilisearch.com>

1965: Reintroduce engine version file r=MarinPostma a=irevoire

Right now if you boot up MeiliSearch and point it to a DB directory created with a previous version of MeiliSearch the existing indexes will be deleted. This [used to be](51d7c84e73) prevented by a startup check which would compare the current engine version vs what was stored in the DB directory's version file, but this functionality seems to have been lost after a few refactorings of the code.

In order to go back to the old behavior we'll need to reintroduce the `VERSION` file that used to be present; I considered reusing the `metadata.json` file used in the dumps feature, but this seemed like the simpler and more approach. As the intent is just to restore functionality, the implementation is quite basic. I imagine that in the future we could build on this and do things like compatibility across major/minor versions and even migrating between formats.

This PR was made thanks to `@mbStavola` and is basically a port of his PR #1860 after a big refacto of the code #1796.

Closes#1840

Co-authored-by: Matt Stavola <m.freitas@offensive-security.com>

Right now if you boot up MeiliSearch and point it to a DB directory created with a previous version of MeiliSearch the existing indexes will be deleted. This used to be prevented by a startup check which would compare the current engine version vs what was stored in the DB directory's version file, but this functionality seems to have been lost after a few refactorings of the code.

In order to go back to the old behavior we'll need to reintroduce the VERSION file that used to be present; I considered reusing the metadata.json file used in the dumps feature, but this seemed like the simpler and more approach. As the intent is just to restore functionality, the implementation is quite basic. I imagine that in the future we could build on this and do things like compatibility across major/minor versions and even migrating between formats.

This PR was made thanks to @mbStavola

Closes#1840

implements:

https://github.com/meilisearch/specifications/blob/develop/text/0085-api-keys.md

- Add tests on API keys management route (meilisearch-http/tests/auth/api_keys.rs)

- Add tests checking authorizations on each meilisearch routes (meilisearch-http/tests/auth/authorization.rs)

- Implement API keys management routes (meilisearch-http/src/routes/api_key.rs)

- Create module to manage API keys and authorizations (meilisearch-auth)

- Reimplement GuardedData to extend authorizations (meilisearch-http/src/extractors/authentication/mod.rs)

- Change X-MEILI-API-KEY by Authorization Bearer (meilisearch-http/src/extractors/authentication/mod.rs)

- Change meilisearch routes to fit to the new authorization feature (meilisearch-http/src/routes/)

- close#1867

1796: Feature branch: Task store r=irevoire a=MarinPostma

# Feature branch: Task Store

## Spec todo

https://github.com/meilisearch/specifications/blob/develop/text/0060-refashion-updates-apis.md

- [x] The update resource is renamed task. The names of existing API routes are also changed to reflect this change.

- [x] Tasks are now also accessible as an independent resource of an index. GET - /tasks; GET - /tasks/:taskUid

- [x] The task uid is not incremented by index anymore. The sequence is generated globally.

- [x] A task_not_found error is introduced.

- [x] The format of the task object is updated.

- [x] updateId becomes uid.

- [x] Attributes of an error appearing in a failed task are now contained in a dedicated error object.

- [x] type is no longer an object. It now becomes a string containing the values of its name field previously defined in the type object.

- [x] The possible values for the type field are reworked to be more clear and consistent with our naming rules.

- [x] A details object is added to contain specific information related to a task payload that was previously displayed in the type nested object. Previous number key is renamed numberOfDocuments.

- [x] An indexUid field is added to give information about the related index on which the task is performed.

- [x] duration format has been updated to express an ISO 8601 duration.

- [x] processed status changes to succeeded.

- [x] startedProcessingAt is updated to startedAt.

- [x] processedAt is updated to finishedAt.

- [x] 202 Accepted requests previously returning an updateId are now returning a summarized task object.

- [x] MEILI_MAX_UDB_SIZE env var is updated MEILI_MAX_TASK_DB_SIZE.

- [x] --max-udb-size cli option is updated to --max-task-db-size.

- [x] task object lists are now returned under a results array.

- [x] Each operation on an index (creation, update, deletion) is now asynchronous and represented by a task.

## Todo tech

- [x] Restore Snapshots

- [x] Restore dumps of documents

- [x] Implements the dump of updates

- [x] Error handling

- [x] Fix stats

- [x] Restore the Analytics

- [x] [Add the new analytics](https://github.com/meilisearch/specifications/pull/92/files)

- [x] Fix tests

- [x] ~Deleting tasks when index is deleted (see bellow)~ see #1891 instead

- [x] Improve details for documents addition and deletion tasks

- [ ] Add integration test

- [ ] Test task store filtering

- [x] Rename `UuidStore` to `IndexMetaStore`, and simplify the trait.

- [x] Fix task store initialization: fill pending queue from hard state

- [x] Synchronously return error when creating an index with an invalid index_uid and add test

- [x] Task should be returned in decreasing uid + tests (on index task route)

- [x] Summarized task view

- [x] fix snapshot permissions

## Implementation

### Linked PRs

- #1889

- #1891

- #1892

- #1902

- #1906

- #1911

- #1914

- #1915

- #1916

- #1918

- #1924

- #1925

- #1926

- #1930

- #1936

- #1937

- #1942

- #1944

- #1945

- #1946

- #1947

- #1950

- #1951

- #1957

- #1959

- #1960

- #1961

- #1962

- #1964

### Linked PRs in milli:

- https://github.com/meilisearch/milli/pull/414

- https://github.com/meilisearch/milli/pull/409

- https://github.com/meilisearch/milli/pull/406

- https://github.com/meilisearch/milli/pull/418

### Issues

- close#1687

- close#1786

- close#1940

- close#1948

- close#1949

- close#1932

- close#1956

### Spec patches

- https://github.com/meilisearch/specifications/pull/90

Co-authored-by: Marin Postma <postma.marin@protonmail.com>

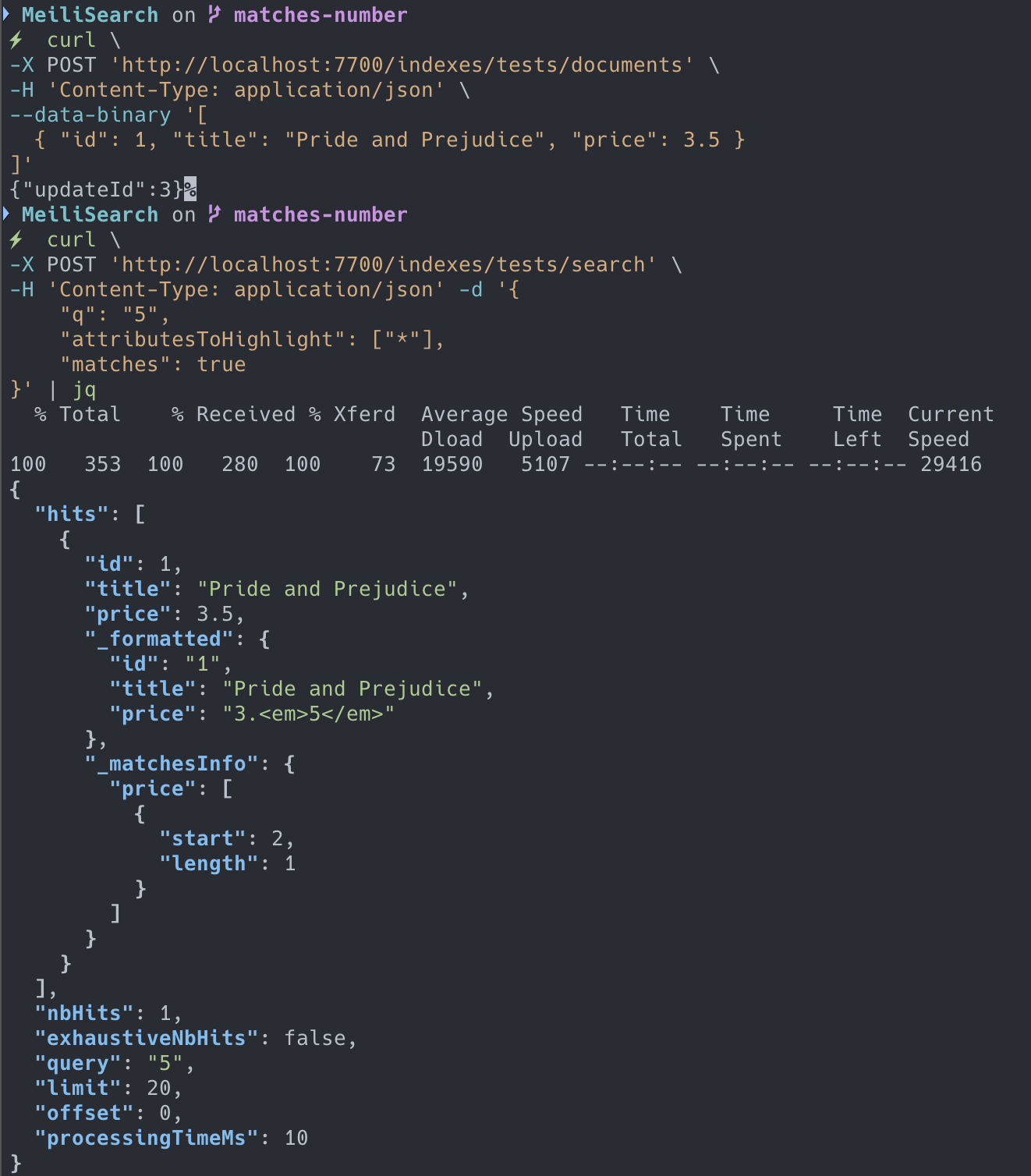

1893: Make matches work with numerical value r=MarinPostma a=Thearas

# Pull Request

## What does this PR do?

Implement #1883.

I have test this PR with unit test. It appears to be working properly:

PTAL `@curquiza`

## PR checklist

Please check if your PR fulfills the following requirements:

- [x] Does this PR fix an existing issue?

- [x] Have you read the contributing guidelines?

- [x] Have you made sure that the title is accurate and descriptive of the changes?

Co-authored-by: Thearas <thearas850@gmail.com>

1896: Remove email address from the message at the launch r=irevoire a=curquiza

I suggest removing this email address from the message at the launch since it can encourage people to think this is an email address for support. Is it something we want `@meilisearch/devrel-team` since we mostly redirect them to the forum or the slack?

Co-authored-by: Clémentine Urquizar <clementine@meilisearch.com>

1897: Add ARM image for Docker to CI r=irevoire a=curquiza

Fixes#1315

- [x] Publish MeiliSearch's docker image for `arm64`

- [x] Add `workflow_dispatch` event in case we need to re-trigger it after a failure without creating a new release

- [x] Use our own server to run the github runner since this CI is really slow (1h instead of 4h)

- [x] Open an issue for a refactor by merging both files in one file (https://github.com/meilisearch/MeiliSearch/issues/1901)

Co-authored-by: Clémentine Urquizar <clementine@meilisearch.com>

1882: Remove release drafter r=curquiza a=curquiza

Remove release drafter since it's not used at the moment due to the specific release process of MeiliSearch.

Co-authored-by: Clémentine Urquizar <clementine@meilisearch.com>

1878: Add error object in task r=MarinPostma a=ManyTheFish

# Pull Request

## What does this PR do?

Fixes#1877

## PR checklist

Please check if your PR fulfills the following requirements:

- [x] Update error test

- [x] Remove flattening of errors during task serialization

Co-authored-by: many <maxime@meilisearch.com>

1875: Fix search post event and disk size analytics r=irevoire a=gmourier

- Branch POST search on the post_search aggregator

- Use largest disk `total_space` instead of `available_space`

1876: Update SEGMENT_API_KEY r=irevoire a=gmourier

Branch it on our Segment production stack

Co-authored-by: Guillaume Mourier <guillaume@meilisearch.com>

1800: Analytics r=irevoire a=irevoire

Closes#1784

Implements [this spec](https://github.com/meilisearch/specifications/blob/update-analytics-specs/text/0034-telemetry-policies.md)

# Anonymous Analytics Policy

## 1. Functional Specification

### I. Summary

This specification describes an exhaustive list of anonymous metrics collected by the MeiliSearch binary. It also describes the tools we use for this collection and how we identify a Meilisearch instance.

### II. Motivation

At MeiliSearch, our vision is to provide an easy-to-use search solution that meets the essential needs of our users. At all times, we strive to understand our users better and meet their expectations in the best possible way.

Although we can gather needs and understand our users through several channels such as Github, Slack, surveys, interviews or roadmap votes, we realize that this is not enough to have a complete view of MeiliSearch usage and features adoption. By cross-referencing our product discovery phases with aggregated quantitative data, we want to make the product much better than what it is today. Our decision-making will be taken a step further to make a product that users love.

### III. Explanation

#### General Data Protection Regulation (GDPR)

The metrics collected are non-sensitive, non-personal and do not identify an individual or a group of individuals using MeiliSearch. The data collected is secured and anonymized. We do not collect any data from the values stored in the documents.

We, the MeiliSearch team, provide an email address so that users can request the removal of their data: privacy@meilisearch.com.<br>

Thanks to the unique identifier generated for their MeiliSearch installation (`Instance uuid` when launching MeiliSearch), we can remove the corresponding data from all the tools we describe below. Any questions regarding the management of the data collected can be sent to the email address as well.

#### Tools

##### Segment

The collected data is sent to [Segment](https://segment.com/). Segment is a platform for data collection and provides data management tools.

##### Amplitude

[Amplitude](https://amplitude.com/) is a tool for graphing and highlighting collected data. Segment feeds Amplitude so that we can build visualizations according to our needs.

-----------

# The `identify` call we send every hour:

## System Configuration `system`

This property allows us to gather essential information to better understand on which type of machine MeiliSearch is used. This allows us to better advise users on the machines to choose according to their data volume and their use-cases.

- [x] `system` => Never changes but still sent every hours

- [x] distribution | On which distribution MeiliSearch is launched, eg: Arch Linux

- [x] kernel_version | On which kernel version MeiliSearch is launched, eg: 5.14.10-arch1-1

- [x] cores | How many cores does the machine have, eg: 24

- [x] ram_size | Total capacity of the machine's ram. Expressed in `Kb`, eg: 33604210

- [x] disk_size | Total capacity of the biggest disk. Expressed in `Kb`, eg: 336042103

- [x] server_provider | Users can tell us on which provider MeiliSearch is hosted by filling the `MEILI_SERVER_PROVIDER` env var. This is also filled by our providers deploy scripts. e.g. GCP [cloud-config.yaml](56a7c2630c/scripts/providers/gcp/cloud-config.yaml (L33)), eg: gcp

## MeiliSearch Configuration

- [x] `context.app.version`: MeiliSearch version, eg: 0.23.0

- [x] `env`: `production` / `development`, eg: `production`

- [x] `has_snapshot`: Does the MeiliSearch instance has snapshot activated, eg: `true`

## MeiliSearch Statistics `stats`

- [x] `stats`

- [x] `database_size`: Size of indexed data. Expressed in `Kb`, eg: 180230

- [x] `indexes_number`: Number of indexes, eg: 2

- [x] `documents_number`: Number of indexed documents, eg: 165847

- [x] `start_since_days`: How many days ago was the instance launched?, eg: 328

---------

- [x] Launched | This is the first event sent to mark that MeiliSearch is launched a first time

---------

- [x] `Documents Searched POST`: The Documents Searched event is sent once an hour. The event's properties are averaged over all search operations during that time so as not to track everything and generate unnecessary noise.

- [x] `user-agent`: Represents all the user-agents encountered on this endpoint during one hour, eg: `["MeiliSearch Ruby (2.1)", "Ruby (3.0)"]`

- [x] `requests`

- [x] `99th_response_time`: The maximum latency, in ms, for the fastest 99% of requests, eg: `57ms`

- [x] `total_suceeded`: The total number of succeeded search requests, eg: `3456`

- [x] `total_failed`: The total number of failed search requests, eg: `24`

- [x] `total_received`: The total number of received search requests, eg: `3480`

- [x] `sort`

- [x] `with_geoPoint`: Does the built-in sort rule _geoPoint rule has been used?, eg: `true` /`false`

- [x] `avg_criteria_number`: The average number of sort criteria among all the requests containing the sort parameter. "sort": [] equals to 0 while not sending sort does not influence the average, eg: `2`

- [x] `filter`

- [x] `with_geoRadius`: Does the built-in filter rule _geoRadius has been used?, eg: `true` /`false`

- [x] `avg_criteria_number`: The average number of filter criteria among all the requests containing the filter parameter. "filter": [] equals to 0 while not sending filter does not influence the average, eg: `4`

- [x] `most_used_syntax`: The most used filter syntax among all the requests containing the requests containing the filter parameter. `string` / `array` / `mixed`, `mixed`

- [x] `q`

- [x] `avg_terms_number`: The average number of terms for the `q` parameter among all requests, eg: `5`

- [x] `pagination`:

- [x] `max_limit`: The maximum limit encountered among all requests, eg: `20`

- [x] `max_offset`: The maxium offset encountered among all requests, eg: `1000`

---

- [x] `Documents Searched GET`: The Documents Searched event is sent once an hour. The event's properties are averaged over all search operations during that time so as not to track everything and generate unnecessary noise.

- [x] `user-agent`: Represents all the user-agents encountered on this endpoint during one hour, eg: `["MeiliSearch Ruby (2.1)", "Ruby (3.0)"]`

- [x] `requests`

- [x] `99th_response_time`: The maximum latency, in ms, for the fastest 99% of requests, eg: `57ms`

- [x] `total_suceeded`: The total number of succeeded search requests, eg: `3456`

- [x] `total_failed`: The total number of failed search requests, eg: `24`

- [x] `total_received`: The total number of received search requests, eg: `3480`

- [x] `sort`

- [x] `with_geoPoint`: Does the built-in sort rule _geoPoint rule has been used?, eg: `true` /`false`

- [x] `avg_criteria_number`: The average number of sort criteria among all the requests containing the sort parameter. "sort": [] equals to 0 while not sending sort does not influence the average, eg: `2`

- [x] `filter`

- [x] `with_geoRadius`: Does the built-in filter rule _geoRadius has been used?, eg: `true` /`false`

- [x] `avg_criteria_number`: The average number of filter criteria among all the requests containing the filter parameter. "filter": [] equals to 0 while not sending filter does not influence the average, eg: `4`

- [x] `most_used_syntax`: The most used filter syntax among all the requests containing the requests containing the filter parameter. `string` / `array` / `mixed`, `mixed`

- [x] `q`

- [x] `avg_terms_number`: The average number of terms for the `q` parameter among all requests, eg: `5`

- [x] `pagination`:

- [x] `max_limit`: The maximum limit encountered among all requests, eg: `20`

- [x] `max_offset`: The maxium offset encountered among all requests, eg: `1000`

---

- [x] `Index Created`

- [x] `user-agent`: Represents the user-agent encountered for this API call, eg: ["MeiliSearch Ruby (2.1)", "Ruby (3.0)"]

- [x] `primary_key`: The name of the field used as primary key if set, otherwise `null`, eg: `id`

---

- [x] `Index Updated`

- [x] `user-agent`: Represents the user-agent encountered for this API call, eg: ["MeiliSearch Ruby (2.1)", "Ruby (3.0)"]

- [x] `primary_key`: The name of the field used as primary key if set, otherwise `null`, eg: `id`

---

- [x] `Documents Added`: The Documents Added event is sent once an hour. The event's properties are averaged over all POST /documents additions operations during that time to not track everything and generate unnecessary noise.

- [x] `user-agent`: Represents the user-agent encountered for this API call, eg: ["MeiliSearch Ruby (2.1)", "Ruby (3.0)"]

- [x] `payload_type`: Represents all the `payload_type` encountered on this endpoint during one hour, eg: [`text/csv`]

- [x] `primary_key`: The name of the field used as primary key if set, otherwise `null`, eg: `id`

- [x] `index_creation`: Does an index creation happened, eg: `false`

---

- [x] `Documents Updated`: The Documents Added event is sent once an hour. The event's properties are averaged over all PUT /documents additions operations during that time to not track everything and generate unnecessary noise.

- [x] `user-agent`: Represents the user-agent encountered for this API call, eg: ["MeiliSearch Ruby (2.1)", "Ruby (3.0)"]

- [x] `payload_type`: Represents all the `payload_type` encountered on this endpoint during one hour, eg: [`application/json`]

- [x] `primary_key`: The name of the field used as primary key if set, otherwise `null`, eg: `id`

- [x] `index_creation`: Does an index creation happened, eg: `false`

---

- [x] Settings Updated

- [x] `user-agent`: Represents the user-agent encountered for this API call, eg: ["MeiliSearch Ruby (2.1)", "Ruby (3.0)"]

- [x] `ranking_rules`

- [x] `sort_position`: Position of the `sort` ranking rule if any, otherwise `null`, eg: `5`

- [x] `sortable_attributes`

- [x] `total`: Number of sortable attributes, eg: `3`

- [x] `has_geo`: Indicate if `_geo` is set as a sortable attribute, eg: `false`

- [x] `filterable_attributes`

- [x] `total`: Number of filterable attributes, eg: `3`

- [x] `has_geo`: Indicate if `_geo` is set as a filterable attribute, eg: `false`

---

- [x] `RankingRules Updated`

- [x] `user-agent`: Represents the user-agent encountered for this API call, eg: ["MeiliSearch Ruby (2.1)", "Ruby (3.0)"]

- [x] `sort_position`: Position of the `sort` ranking rule if any, otherwise `null`, eg: `5`

---

- [x] `SortableAttributes Updated`

- [x] `user-agent`: Represents the user-agent encountered for this API call, eg: ["MeiliSearch Ruby (2.1)", "Ruby (3.0)"]

- [x] `total`: Number of sortable attributes, eg: `3`

- [x] `has_geo`: Indicate if `_geo` is set as a sortable attribute, eg: `false`

---

- [x] `FilterableAttributes Updated`

- [x] `user-agent`: Represents the user-agent encountered for this API call, eg: ["MeiliSearch Ruby (2.1)", "Ruby (3.0)"]

- [x] `total`: Number of filterable attributes, eg: `3`

- [x] `has_geo`: Indicate if `_geo` is set as a filterable attribute, eg: `false`

---

- [x] Dump Created

- [x] `user-agent`: Represents the user-agent encountered for this API call, eg: ["MeiliSearch Ruby (2.1)", "Ruby (3.0)"]

---

Ensure the user-id file is well saved and loaded with:

- [x] the dumps

- [x] the snapshots

- [x] Ensure the CLI uuid only show if analytics are activate at launch **or already exists** (=even if meilisearch was launched without analytics)

Co-authored-by: Tamo <tamo@meilisearch.com>

Co-authored-by: Irevoire <tamo@meilisearch.com>

1852: Add tests for mini-dashboard status and assets r=curquiza a=CuriousCorrelation

## Summery

Added tests for `mini-dashboard` status including assets.

## Ticket link

PR closes #1767

Co-authored-by: CuriousCorrelation <CuriousCorrelation@protonmail.com>

1847: Optimize document transform r=MarinPostma a=MarinPostma

integrate the optimization from https://github.com/meilisearch/milli/pull/402.

optimize payload read, by reading it to RAM first instead of streaming it. This means that the payload must fit into RAM, which should not be a problem.

Add BufWriter to the obkv writer to improve write speed.

I have measured a gain of 40-45% in speed after these optimizations.

Co-authored-by: marin postma <postma.marin@protonmail.com>

1830: Add MEILI_SERVER_PROVIDER to Dockerfile r=irevoire a=curquiza

Add docker information in `MEILI_SERVER_PROVIDER` env variable

It does not impact the telemetry spec since it's an already existing variable used on our side.

Co-authored-by: Clémentine Urquizar <clementine@meilisearch.com>

1811: Reducing ArmV8 binary build time with action-rs (cross build with Rust) r=curquiza a=patrickdung

This pull request is based on [discussion #1790](https://github.com/meilisearch/MeiliSearch/discussions/1790)

Note:

1) The binaries of this PR is additional to existing binary built

Existing binary would be produced (by existing GitHub workflow/action)

meilisearch-linux-amd64

meilisearch-linux-armv8

meilisearch-macos-amd64

meilisearch-windows-amd64.exe

meilisearch.deb

2) This PR produce these binaries. The name 'meilisearch-linux-aarch64' is used to avoid naming conflict with 'meilisearch-linux-armv8'.

meilisearch-linux-aarch64

meilisearch-linux-aarch64-musl

meilisearch-linux-aarch64-stripped

meilisearch-linux-amd64-musl

3) If it's fine (in next release), we should submit another PR to stop generating meilisearch-linux-armv8 (which could take two to three hours to build it)

Co-authored-by: Patrick Dung <38665827+patrickdung@users.noreply.github.com>

1822: Tiny improvements in download-latest.sh r=irevoire a=curquiza

- Add check on `$latest` to check if it's empty. We have some issue on the swift SDK currently where the version number seems not to be retrieved, but we don't why https://github.com/meilisearch/meilisearch-swift/pull/216

- Replace some `"` by `'`

- Rename `$BINARY_NAME` by `$binary_name` to make them consistent with the other variables that are filled all along the script

Co-authored-by: Clémentine Urquizar <clementine@meilisearch.com>

1824: Fix indexation perfomances on mounted disk r=ManyTheFish a=ManyTheFish

We were creating all of our tempfiles in data.ms directory, but when the database directory is stored in a mounted disk, tempfiles I/O throughput decreases, impacting the indexation time.

Now, only the persisting tempfiles will be created in the database directory. Other tempfiles will stay in the default tmpdir.

Co-authored-by: many <maxime@meilisearch.com>

1813: Apply highlight tags on numbers in the formatted search result output r=irevoire a=Jhnbrn90

This is my first ever Rust related PR.

As described in #1758, I've attempted to highlighting numbers correctly under the `_formatted` key.

Additionally, I added a test which should assert appropriate highlighting.

I'm open to suggestions and improvements.

Co-authored-by: John Braun <john@brn.email>

1801: Update milli version to v0.17.3 to fix inference issue r=curquiza a=curquiza

Fixes#1798

Co-authored-by: Clémentine Urquizar <clementine@meilisearch.com>

1793: Remove memmap dependency r=curquiza a=palfrey

Fixes#1792. I was going to replace with [memmap2](https://github.com/RazrFalcon/memmap2-rs) which should be a drop-in replacement, but I couldn't actually find anything that actually directly used it. It ends up being a dependency in [milli](https://github.com/meilisearch/milli) so I'm going to go there next and fix that.

Co-authored-by: Tom Parker-Shemilt <palfrey@tevp.net>

1783: Fix too many open file error r=ManyTheFish a=ManyTheFish

- prepare_for_closing() function wasn't called when an index is deleted, we are now calling it

- Index wasn't deleted in the case where we couldn't insert `uid` in `index_uuid_store`, we are now cleaning it

Fix#1736

Co-authored-by: many <maxime@meilisearch.com>

1781: Optimize build size r=irevoire a=MarinPostma

Remove debug symbols from the release build, and strip the binaries.

We used to need to debug symbols for sentry, but since it was removed with #1616, we don't need them anymore.

Shrinks the binary size from ~300MB to ~50MB on linux.

Co-authored-by: mpostma <postma.marin@protonmail.com>

1769: Enforce `Content-Type` header for routes requiring a body payload r=MarinPostma a=irevoire

closes#1665

Co-authored-by: Tamo <tamo@meilisearch.com>

- remove the payload_error_handler in favor of a PayloadError::from

- merge the two match branch into one

- makes the accepted content type a const instead of recalculating it for every error

1763: Index tests r=MarinPostma a=MarinPostma

This pr aims to test more thorougly the usage on index in the meilisearch database, by writing unit tests.

work included:

- [x] Create index mock and stub methods

- [x] Test snapshot creation

- [x] Test Dumps

- [x] Test search

Co-authored-by: mpostma <postma.marin@protonmail.com>

1768: Fix auth error r=irevoire a=MarinPostma

fix a small auth error, that set the invalid token error token to "hello". This was invilisble to the user because the invalid token is not returned.

thank you hawk-eye `@irevoire`

Co-authored-by: mpostma <postma.marin@protonmail.com>

1755: Fix mini dashboard r=curquiza a=anirudhRowjee

This commit is a fix to issue #1750.

As a part of the changes to solve this issue, the following changes have

been made -

1. Route registration for static assets has been modified

2. the `mut` keyword on the `scope` has been removed.

Co-authored-by: Anirudh Rowjee <ani.rowjee@gmail.com>

This commit is a fix to issue #1750.

As a part of the changes to solve this issue, the following changes have

been made -

1. Route registration for static assets has been modified

2. the `mut` keyword on the `scope` has been removed.

1747: Add new error types for document additions r=curquiza a=MarinPostma

Adds the missing errors for the documents routes, as specified.

close#1691close#1690

Co-authored-by: mpostma <postma.marin@protonmail.com>

1746: Do not commit transaction on failed updates r=irevoire a=Kerollmops

This PR fixes MeiliSearch that was always committing the transactions even when an update was invalid and the whole transaction should have been trashed. It was the source of a bug where an invalid update (with an invalid primary key) was creating an index with the specified primary key and should instead have failed and done nothing on the server.

Fixes#1735.

Co-authored-by: Kerollmops <clement@meilisearch.com>

1742: Create dumps v3 r=irevoire a=MarinPostma

The introduction of the obkv document format has changed the format of the updates, by removing the need for the document format of the addition (it is not necessary since update are store in the obkv format). This has caused breakage in the dumps that this pr solves by introducing a 3rd version of the dumps.

A v2 compat layer has been created that support the import of v2 dumps into meilisearch. This has permitted to move the compat code that existed elsewhere in meiliearch to be moved into the v2 module. The asc/desc patching is now only done for forward compatibility when loading a v2 dump, and the v3 write the asc/desc to the dump with the new syntax.

Co-authored-by: mpostma <postma.marin@protonmail.com>

1697: Make exec binary for M1 mac available for download r=irevoire a=k-nasa

## Why

fix: https://github.com/meilisearch/MeiliSearch/issues/1661

Now, Do not supported getting exec file for m1 mac on using`download-latest.sh`.

## What

Download x86 binary when run `download-latest.sh` on m1 mac, because it can execute binary targeting x86.

## Proof

I verified like this.

I got executable binary on M1 mac 💡

```sh

:) % arch

arm64

:) % ./download-latest.sh

Downloading MeiliSearch binary v0.21.1 for macos, architecture amd64...

% Total % Received % Xferd Average Speed Time Time Time Current

Dload Upload Total Spent Left Speed

100 631 100 631 0 0 2035 0 --:--:-- --:--:-- --:--:-- 2035

100 43.5M 100 43.5M 0 0 7826k 0 0:00:05 0:00:05 --:--:-- 9123k

MeiliSearch binary successfully downloaded as 'meilisearch' file.

Run it:

$ ./meilisearch

Usage:

$ ./meilisearch --help

:) % file ./meilisearch

./meilisearch: Mach-O 64-bit executable x86_64

:) % ./meilisearch --help # this is execuable

meilisearch-http 0.21.1

...

...

```

Co-authored-by: k-nasa <htilcs1115@gmail.com>

Co-authored-by: nasa <htilcs1115@gmail.com>

1748: Add a link to join the cloud-hosted beta r=MarinPostma a=gmourier

The product team would like to add a link to communicate and invite users to fill out the form to test the closed beta of our cloud solution.

We have done the same thing on the documentation side https://github.com/meilisearch/documentation/pull/1148. 😇

Co-authored-by: Guillaume Mourier <guillaume@meilisearch.com>

1739: Fix add document Content-Type r=curquiza a=MarinPostma

change the `Content-Type` guards of the document addition routes to match the specification.

Co-authored-by: mpostma <postma.marin@protonmail.com>

1711: MeiliSearch refactor introducing OBKV format r=MarinPostma a=MarinPostma

This PR refactor some multiple components of meilisearch, and introduce the obkv document format to meilisearch

- [x] Split meilisearch-http and meilisearch-lib

- [x] Replace `IndexActor` and `UuidResolver` with `IndexResolver`

- [x] Remove mentions to Actor

- [x] Remove Actor traits to simplify code

- [x] Integrate obkv document format

- [x] Remove `Data`

- [x] Restore all route

- [x] Replace `Box<dyn error>` with `anyhow::Error`

- [x] Introduce update file store

- [x] Update file store error handling

- [x] Fix dumps

- [x] Fix snapshots

- [x] Fix tests

- [x] Update module documentation

- [x] add csv suppport (feat `@ManyTheFish` #1729 )

- [x] add jsonl support

- [x] integrate geosearch (feat `@irevoire` #1725)

partially implements #1691 and #1690. The error handling is very basic now, I will finish it in the next pr.

Some unit tests have been disabled, I will re-enable them ASAP, but they need a bit more work.

close#1531

P.S: sorry for this monstrous PR :'(

Co-authored-by: mpostma <postma.marin@protonmail.com>

Co-authored-by: Tamo <tamo@meilisearch.com>

Co-authored-by: many <maxime@meilisearch.com>

1703: Trigger CodeCoverage manually instead of on each PR r=irevoire a=curquiza

Since no one is using it now on the PRs, we would rather get a state of the code coverage once (triggered manually) rather than on each PR.

Co-authored-by: Clémentine Urquizar <clementine@meilisearch.com>

1724: Redo CONTRIBUTING.md r=curquiza a=curquiza

- Update `Development` section

- Update the `Git Guidelines` section

- Remove `Benchmarking & Profiling` -> done on the milli side at the moment

- Remove `Humans` -> synchronization job done by the manager of the core team at the moment

- Remove `Changelog` section -> done by the manager and the docs team

- Remove `Documentation` section -> job done by the manager to synchronize both teams.

Fixes#1723 at the same time

Co-authored-by: Clémentine Urquizar <clementine@meilisearch.com>

1659: deps: unify pest dependency r=MarinPostma a=happysalada

meilisearch dependends on two different versions of pest.

This can be problematic for some build systems (e.g. NixOS).

Since the repo hasn't received an update in a while, in the meantime, use the later version of the two pest dependencies.

Context: this has been discussed previously https://github.com/meilisearch/MeiliSearch/issues/1273

meilisearch has been selected by ngi to be packaged for nixos. A patch can be applied to make the changes proposed in this PR. This PR intends to see how the maintainers of meilisearch would feel about the patch.

What was done.

- Add an override for the pest dependency in Cargo.toml.

- recreate the Cargo.lock with `cargo update`. This has had the side effect of updating some dependencies.

I ran the tests on darwin. My machine is quite old so I had 8 failures due to a timeout. None of the failures look like they are due to the new dependencies.

Checking the pest repo, it seems there are some recent commits, however no sure date of when there could be a new release.

If this gets accepted, there is no need to do a new release, nixos can just target the new commit.

If you feel it's too much pain for not enough gain, no worries at all!

Co-authored-by: happysalada <raphael@megzari.com>

1692: Use tikv-jemallocator instead of jemallocator r=curquiza a=felixonmars

`jemallocator` has been abandoned for nearly two years, and `rustc`

itself moved to use `tikv-jemallocator` instead:

3965773ae7

Let's switch to a better maintained version.

Co-authored-by: Felix Yan <felixonmars@archlinux.org>

`jemallocator` has been abandoned for nearly two years, and `rustc`

itself moved to use `tikv-jemallocator` instead:

3965773ae7

Let's switch to a better maintained version.

1651: Use reset_sortable_fields r=Kerollmops a=shekhirin

Resolves https://github.com/meilisearch/MeiliSearch/issues/1635

1676: Add curl binary to final stage image r=curquiza a=ook

Reference: #1673

Changes: * add `curl` binary to final docker Melisearch image.

For metrics, docker funny layer management makes this add a shrink from 319MB to 315MB:

```

☁ MeiliSearch [feature/1673-add-curl-to-docker-image] ⚡ docker image ls

REPOSITORY TAG IMAGE ID CREATED SIZE

getmeili/meilisearch 0.22.0_ook_1673 938e239ad989 2 hours ago 315MB

getmeili/meilisearch latest 258fa3aa1230 6 days ago 319MB

```

1684: bump dependencies r=MarinPostma a=MarinPostma

Bump meilisearch dependencies.

We still depend on custom patch that have been upgraded along the way.

Co-authored-by: Alexey Shekhirin <a.shekhirin@gmail.com>

Co-authored-by: Thomas Lecavelier <thomas@followanalytics.com>

Co-authored-by: mpostma <postma.marin@protonmail.com>

1682: Change the format of custom ranking rules when importing old dumps r=curquiza a=Kerollmops

This PR changes the format of the custom ranking rules from `asc(price)` to `title:asc` as the format changed between v0.21 and v0.22. The dumps are now correctly importing the custom ranking rules.

This PR also change the previous default ranking rules (without sort) to the new default ranking rules (with the new sort).

Co-authored-by: Kerollmops <clement@meilisearch.com>

1669: Fix windows integration tests r=MarinPostma a=ManyTheFish

Set max_memory value to unlimited during tests:

because tests run several meilisearch in parallel,

we overestimate the value for max_memory making the tests on Windows crash

Co-authored-by: many <maxime@meilisearch.com>

1658: Remove COMMIT_SHA and COMMIT_DATE build arg from the Docker CIs r=irevoire a=curquiza

Since `@irevoire` add the `.git` folder in the Dockerfile, no need to compute `COMMIT_SHA` and `COMMIT_DATE` in the CI.

Can you confirm `@irevoire?`

Also, update some CIs using `checkout@v1` to `checkout@v2`

Co-authored-by: Clémentine Urquizar <clementine@meilisearch.com>

1652: Remove dependabot r=MarinPostma a=curquiza

Fixes#1649

Dependabot for vulnerability and security updates is still activated.

1654: Add Script for Windows r=MarinPostma a=singh08prashant

fixes#1570

changes:

1. added script for detecting windows os running git bash

2. appended `.exe` to `$release_file` for windows as listed [here](https://github.com/meilisearch/MeiliSearch/releases/)

3. removed global `$BINARY_NAME='meilisearch'` as windows require `.exe` file

1657: Bring vergen hotfix from `stable` to `main` r=MarinPostma a=curquiza

Co-authored-by: Clémentine Urquizar <clementine@meilisearch.com>

Co-authored-by: singh08prashant <singh08prashant@gmail.com>

Co-authored-by: Kerollmops <clement@meilisearch.com>

Co-authored-by: bors[bot] <26634292+bors[bot]@users.noreply.github.com>

1656: Remove unused Arc import r=MarinPostma a=Kerollmops

This PR removes a warning introduced by #1606 which removed Sentry that was using an `Arc` but forgot to remove the scope import, we remove it here.

Co-authored-by: Kerollmops <clement@meilisearch.com>

1636: Hotfix: Log but don't panic when vergen can't retrieve commit information r=curquiza a=Kerollmops

This pull request fixes an issue we discovered when we tried to publish meilisearch v0.21 on brew, brew uses the tarball downloaded from github directly which doesn't contain the `.git` folder.

We use the `.git` folder with [vergen](https://docs.rs/vergen) to retrieve the commit and datetime information. Unfortunately, we were unwrapping the vergen result and it was crashing when the git folder was missing.

We no more panic when vergen can't find the `.git` folder and just log out a potential error returned by [the git2 library](https://docs.rs/git2). We then just check that the env variables are available at compile-time and replace it with "unknown" if not.

### When the `.git` folder is available

```

xh localhost:7700/version

HTTP/1.1 200 OK

Content-Type: application/json

Date: Thu, 26 Aug 2021 13:44:23 GMT

Transfer-Encoding: chunked

{

"commitSha": "81a76eab69944de8a8d5006345b5aec7b02acf50",

"commitDate": "2021-08-26T13:41:30+00:00",

"pkgVersion": "0.21.0"

}

```

### When the `.git` folder is unavailable

```bash

cp -R meilisearch meilisearch-cpy

cd meilisearch-cpy

rm -rf .git

cargo clean

cargo run --release

<snip>

Compiling meilisearch-http v0.21.0 (/Users/clementrenault/Documents/meilisearch-cpy/meilisearch-http)

warning: vergen: could not find repository from '/Users/clementrenault/Documents/meilisearch-cpy/meilisearch-http'; class=Repository (6); code=NotFound (-3)

```

```

xh localhost:7700/version

HTTP/1.1 200 OK

Content-Type: application/json

Date: Thu, 26 Aug 2021 13:46:33 GMT

Transfer-Encoding: chunked

{

"commitSha": "unknown",

"commitDate": "unknown",

"pkgVersion": "0.21.0"

}

```

Co-authored-by: Kerollmops <clement@meilisearch.com>

1605: Fix pacic when decoding r=curquiza a=curquiza

Update milli to fix the panic during document deletion

Co-authored-by: Clémentine Urquizar <clementine@meilisearch.com>

1533: Update milli version to v0.8.0 r=MarinPostma a=curquiza

- Update milli, heed and obkv

- fix relevancy issue and the `facetsDistribution` display

Co-authored-by: Clémentine Urquizar <clementine@meilisearch.com>

1528: Update of the Date Time Format in commitDate r=MarinPostma a=irevoire

Since we were relying on a [super old version of `vergen`](https://docs.rs/crate/vergen/3.0.1), we could not get the `commit timestamp`, so I updated `vergen` to the latest version.

This also allows us to remove all the features we don't use.

closes#1522

Co-authored-by: Tamo <tamo@meilisearch.com>

1521: Sentry was never sending anything r=Kerollmops a=irevoire

@Kerollmops noticed that we had no log of this release in sentry, and it look like I badly tested my code after ignoring the “No space left on device” errors.

Now it should be fixed.

Co-authored-by: Tamo <tamo@meilisearch.com>

1498: Show the filterable and not the faceted attributes in the settings r=Kerollmops a=Kerollmops

Fixes#1497

Co-authored-by: Clément Renault <clement@meilisearch.com>

1484: Add MeiliSearch version to issue template r=irevoire a=bidoubiwa

It is relevant to know the version of MeiliSearch before any other additional information that might be important to know.

We could also reduce the number of required information asked to the user. I would like to suggest the following:

Instead of the section of `Desktop` and `Smartphone` I would just improve the last section

```

**Additional context**

Additional information that may be relevant to the issue.

[e.g. architecture, device, OS, browser]

```

By applying this, the template final look will be the following:

-----

**Describe the bug**

A clear and concise description of what the bug is.

**To Reproduce**

Steps to reproduce the behavior:

1. Go to '...'

2. Click on '....'

3. Scroll down to '....'

4. See error

**Expected behavior**

A clear and concise description of what you expected to happen.

**Screenshots**

If applicable, add screenshots to help explain your problem.

**MeiliSearch version:** [e.g. v0.20.0]

**Additional context**

Additional information that may be relevant to the issue.

[e.g. architecture, device, OS, browser]

Co-authored-by: Charlotte Vermandel <charlottevermandel@gmail.com>

1481: fix bug in index deletion r=Kerollmops a=MarinPostma

this bug was caused by a heed iterator entry being deleted while still holding a reference to it.

close#1333

Co-authored-by: mpostma <postma.marin@protonmail.com>

1478: refactor routes r=irevoire a=MarinPostma

refactor the route directory, so the module tree follows the route structure

Co-authored-by: mpostma <postma.marin@protonmail.com>

1457: Hotfix highlight on emojis panic r=Kerollmops a=ManyTheFish

When the highlight bound is in the middle of a character

or if we are out of bounds, we highlight the complete matching word.

note: we should enhance the tokenizer and the Highlighter to match char indices.

Fix#1368

Co-authored-by: many <maxime@meilisearch.com>

1456: Fix update loop timeout r=Kerollmops a=Kerollmops

This PR fixes a wrong fix of the update loop introduced in #1429.

Co-authored-by: Kerollmops <clement@meilisearch.com>

When the highlight bound is in the middle of a character

or if we are out of bounds, we highlight the complete matching word.

note: we should enhance the tokenizer and the Highlighter to match char indices.

Fix#1368

259: Run rustfmt one the whole project and add it to the CI r=curquiza a=irevoire

Since there is currently no other PR modifying the code, I think it's a good time to reformat everything and add rustfmt to the ci.

Co-authored-by: Tamo <tamo@meilisearch.com>

258: Use rustls instead of openssl r=curquiza a=irevoire

I also removed all the `default-features` of reqwest since we are only using the JSON one.

Fix#255

Co-authored-by: Tamo <tamo@meilisearch.com>

246: Stop logging the no space left on device error r=curquiza a=irevoire

closes#208

@qdequele what do you think of that?

Are there any other errors we need to ignore?